Prologue

At the end of the 20th century, Ben Kacyra revolutionised 3D measurement with the launch of the Cyrax LiDAR terrestrial scanner, which could measure a Point Cloud of millions of 3D points to mm accuracy within minutes.

The colours attributed to the points were determined by the strength of the returned laser signal. To add real-world colours to the points, a 360° panorama was taken so that the NPP (No Parallax Point) of the camera was coincident with the centre of rotation of the scanner, following the procedure described in “Spherical Panoramas for HDS Point Clouds” and “Using 360° Panoramas to add Colour to HDS Point Clouds”.

At the time, the recommended equipment for creating the 360° panoramas was a DSLR fitted with an 8mm fisheye lens, mounted on a Nodal Ninja NN3 panoramic head with an appropriate vertical spacer to ensure that the centre of rotation was coincident with that of the scanner.

One of my roles at Leica Geosystems was to support and train on this application. Customers were soon asking if other lenses could be used, so I tested a variety of different ones including, but not limited to, a 10.5mm fisheye, the 10mm end of a 10-20mm zoom lens and the 18mm end of an 18-200mm zoom.

It is imperative that the correct colour is assigned to each point in the Point Cloud, so a test site with a number of hard edges was selected. In every case the correct colour was achieved with the different lenses, proving the accuracy of the 360° panoramas stitched with PTGui.

This suggested that a 360° panorama could be effectively used as a “photographic Theodolite” although the accuracy would be limited by the resolution of the panorama.
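To illustrate the “photographic Theodolite” idea, the sketch below converts a pixel position in a panorama into a horizontal and a vertical angle, and estimates the angle covered by a single pixel. It assumes an equirectangular panorama (360° across the width, 180° down the height), which is an assumption made here for illustration; the function names and the example image width are hypothetical.

```python
def pixel_to_angles(x, y, width, height):
    """Return (azimuth_deg, elevation_deg) for the centre of pixel (x, y).

    Assumes an equirectangular panorama: the width spans 360 degrees of
    azimuth and the height spans 180 degrees, from +90 (zenith, top row)
    to -90 (nadir, bottom row).
    """
    azimuth = (x + 0.5) / width * 360.0
    elevation = 90.0 - (y + 0.5) / height * 180.0
    return azimuth, elevation


def angular_resolution_arcsec(width):
    """Angle covered by one pixel along the horizon, in seconds of arc."""
    return 360.0 * 3600.0 / width


if __name__ == "__main__":
    # Hypothetical panorama 12960 pixels wide and 6480 pixels high.
    print(pixel_to_angles(6480, 3240, 12960, 6480))
    print(angular_resolution_arcsec(12960))
```

Under these assumptions a panorama 12960 pixels wide covers 100 seconds of arc per pixel, which gives a feel for how resolution limits the angles that can be read from it.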

The advantages of this are that the equipment needed to create a 360° panorama costs considerably less than a Theodolite or Total Station, which in turn costs considerably less than a LiDAR scanner, and that additional measurements can be taken without revisiting the site, as would be necessary with a Theodolite or Total Station.

Today there is a plethora of multi-lens cameras for capturing 360° panoramas, which have the advantage of needing a single shot rather than a set of shots. But would these have the accuracy required for measurement? There are two fundamental differences between the two methods.

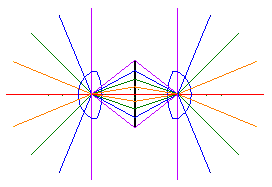

First, a camera with a single lens can be mounted on a panohead and rotated about the NPP (No Parallax Point, sometimes called the front Nodal Point or Entrance Pupil), or more usually about the best estimate of the NPP, as this often varies according to the angle of incidence of the incoming ray, as shown in “Finding the Nodal Point of a Lens”. With a multi-lens camera this is physically impossible, as shown in the schematic.

Second, the images taken with the camera on a panohead have plenty of overlap for Control Point generation and are stitched with software such as PTGui, whilst those from a multi-lens camera usually have very limited overlap and are joined by bespoke software using the known geometry of the camera.
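The effect of a lens that cannot sit on the axis of rotation can be estimated with simple geometry. The sketch below computes the angle between the ray from the rotation axis to a target and the ray from an offset lens to the same target. The function name, the 20 mm offset and the target distances are hypothetical values chosen for illustration, not measurements of any particular camera.

```python
import math


def parallax_error_deg(offset_m, distance_m, bearing_deg=90.0):
    """Angular error caused by viewing a target from an offset lens.

    The rotation axis is at the origin and the target lies at
    (distance_m, 0). The lens sits offset_m from the axis, in a direction
    bearing_deg away from the line of sight (90 = worst case, sideways).
    """
    theta = math.radians(bearing_deg)
    dx = distance_m - offset_m * math.cos(theta)
    dy = -offset_m * math.sin(theta)
    return abs(math.degrees(math.atan2(dy, dx)))


if __name__ == "__main__":
    # Hypothetical 20 mm offset, targets at 2 m and 20 m.
    for distance in (2.0, 20.0):
        print(distance, round(parallax_error_deg(0.02, distance), 3))
```

The error shrinks as the target moves further away, so parallax matters most for nearby objects.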

Investigation

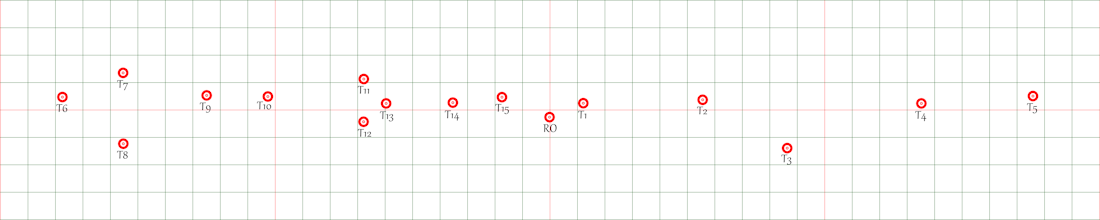

To investigate whether a multi-lens camera would provide a 360° panorama of the same accuracy as a single lens camera rotated about its NPP, I set up fifteen random targets. I used a sturdy Wild (Leica Geosystems) tripod with a forced centering Tribrach to ensure that all three sets of equipment would have exactly the same vertical axis of rotation.

To provide the benchmark angles, I first measured all the targets with a Kern DKM2-A 1″ Theodolite.

To provide an example of using a fisheye lens on an FX DSLR, I used a 10.5mm and an 8mm lens mounted on a Nodal Ninja R1 and took one set of 4 shots round and one set of 6 shots round with each lens. These were stitched with PTGui.

I then took eight panoramas with a Samsung Gear 360 dual-lens camera mounted on a Nodal Ninja NN3, each set at 45° to the previous one. These were joined using Gear 360 ActionDirector.

Results

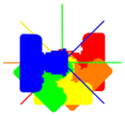

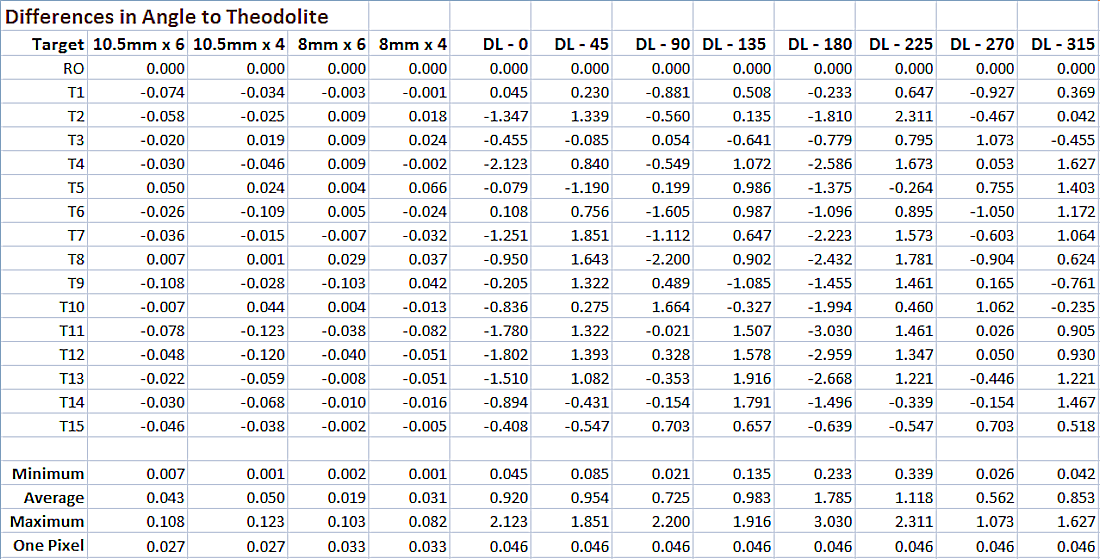

The results show a marked difference between the single lens and dual-lens cameras.

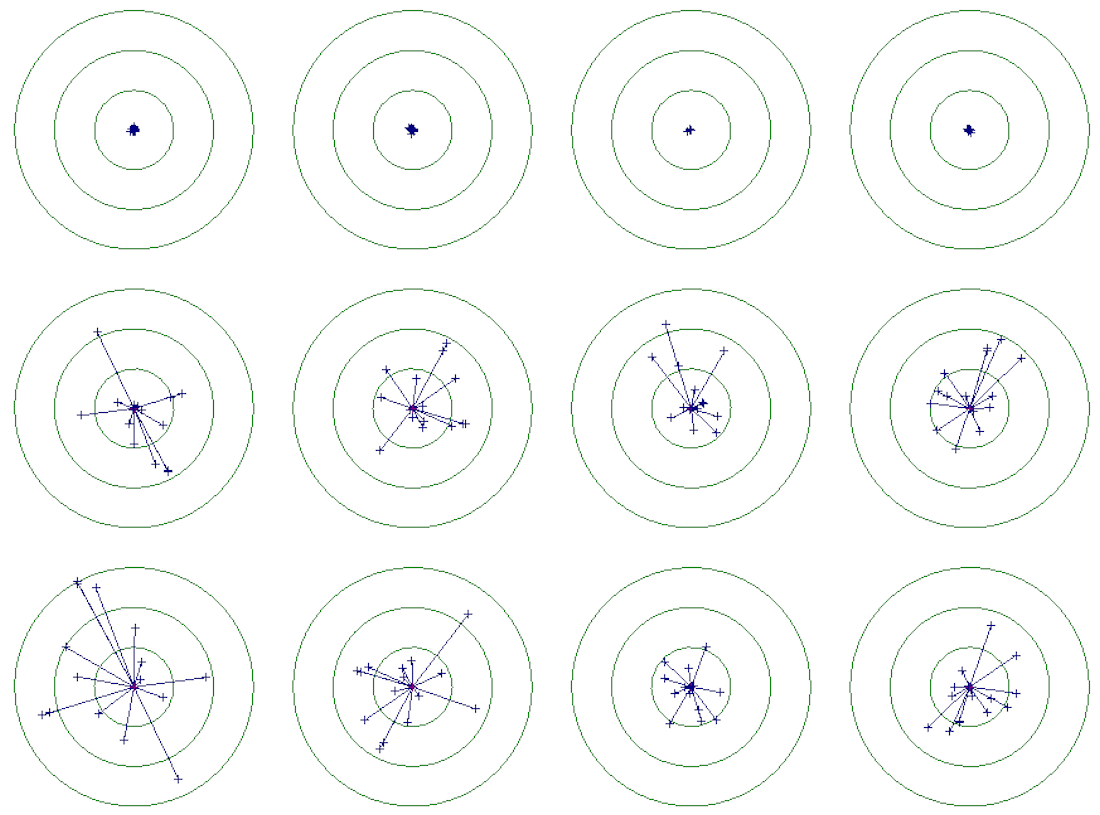

The diagram above shows the difference in angles graphically, with the green circles showing 1, 2 and 3 degrees of arc.

The top row shows the results from a single lens rotated about its NPP. Starting from the left: 10.5mm fisheye 6 shots round and 4 shots round, then 8mm fisheye 6 shots round and 4 shots round.

The other two rows show the results from the Samsung Gear 360, with the principal ray from the front lens pointing upwards in each case.
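A comparison of this kind can be sketched as follows: horizontal angles read from a panorama are compared with benchmark angles, taking care of the 0°/360° wrap-around. Because the zero direction of a panorama is arbitrary, the sketch orients it on one reference target first; that step, the function names and the sample angles are all assumptions made for illustration and are not the data from this investigation.

```python
def wrap_deg(angle):
    """Wrap an angle in degrees into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def residuals_deg(benchmark, measured, reference=0):
    """Differences measured - benchmark after orienting on one target.

    The orientation offset is taken from the target at index `reference`,
    so that target always has a residual of zero.
    """
    offset = wrap_deg(measured[reference] - benchmark[reference])
    return [wrap_deg(m - b - offset) for b, m in zip(benchmark, measured)]


if __name__ == "__main__":
    # Hypothetical angles in degrees, not taken from the investigation.
    benchmark = [10.0, 100.0, 350.0]
    panorama = [15.0, 105.5, 354.0]
    print(residuals_deg(benchmark, panorama))
```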

Most lenses have some radial distortion, such as pincushion or barrel. This is noticeable in photographs containing straight lines, but not in a landscape with no straight lines. If such a lens is to be used for measurement (e.g. Photographic Intersection) then it needs to be calibrated.

As the primary function of a 360° panorama is visual, small amounts of distortion would not be noticed when viewing the panorama, but are significant if the panorama is to be used for measurement.
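As a rough illustration of why calibration matters, the sketch below applies a simple polynomial radial distortion model to normalised image coordinates. This is a commonly used model and is offered here as an assumption, not as the model used by any software named in this article; the coefficient values are hypothetical.

```python
def apply_radial_distortion(x, y, k1, k2=0.0):
    """Apply polynomial radial distortion to normalised coordinates.

    The point is scaled by (1 + k1*r^2 + k2*r^4), where r is its distance
    from the image centre. In this convention a negative k1 pulls points
    inwards (barrel) and a positive k1 pushes them outwards (pincushion).
    """
    r2 = x * x + y * y
    factor = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * factor, y * factor


if __name__ == "__main__":
    # Hypothetical coefficient: the shift grows towards the image edge.
    for r in (0.25, 0.5, 1.0):
        xd, _ = apply_radial_distortion(r, 0.0, k1=-0.1)
        print(r, xd, r - xd)
```

The displacement is negligible near the centre of the image and grows rapidly towards the edges, which is why it can go unnoticed visually yet still affect measured angles.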

Conclusion

This investigation suggests that if 360° panoramas are to be used for measurement, it is best to rotate a single-lens camera about the NPP of its lens rather than use a multi-lens camera, unless such a camera is calibrated for measurement.

For this investigation the Samsung Gear 360 was rotated about its central axis with eight shots round at 45° intervals.

As each image could be split into the left and right hemispheres, this provided four sets of four shots round at 90°, two from the front lens and two from the rear lens, giving 50% overlap for Control Point generation when processing in PTGui. The results are shown on Samsung Gear 360 – Further Investigation.
